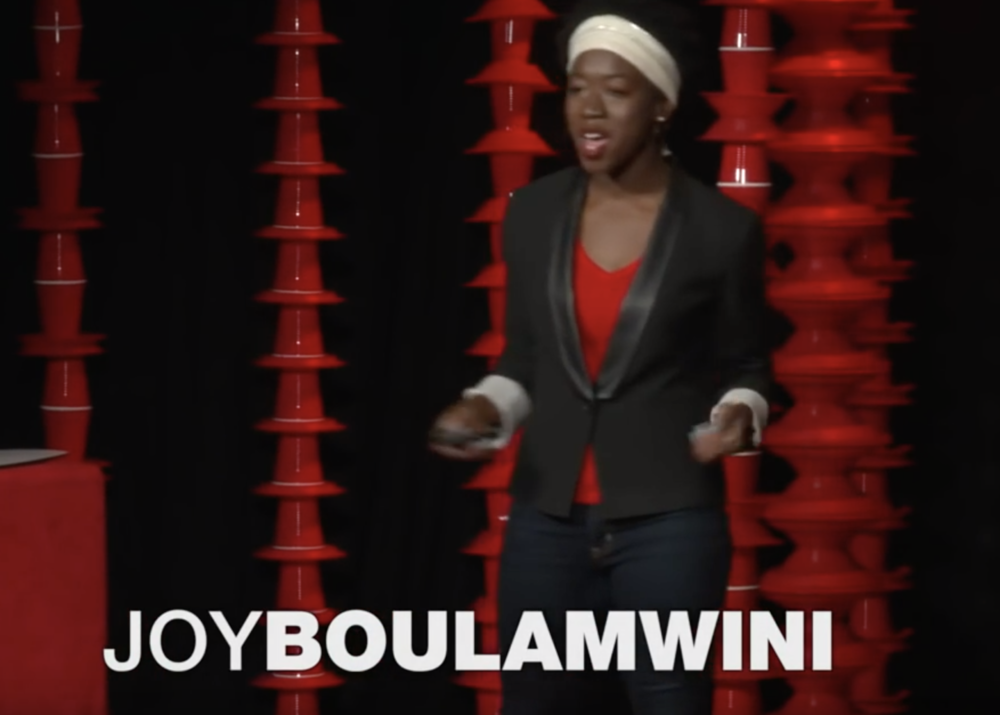

MIT grad student Joy Buolamwini was working with facial analysis software when she noticed a problem: the software didn't detect her face, because the people who coded the algorithm hadn't taught it to identify a broad range of skin tones and facial structures.

Now she’s on a mission to fight bias in machine learning, a phenomenon she calls the “coded gaze.” It’s an eye-opening talk about the need for accountability in coding … as algorithms take over more and more aspects of our lives.

Note from SunShower: our programs can help you develop your ability to track your biases.